Some researchers have asked me to disclose my code, so I provide the code for the major models. It is implemented in C++. I am not responsible for any errors in the code or for its readability. Please refrain from asking me about the code.

One hundred sets of training patterns are randomly generated, and 100 trials are conducted for each set. Intermediate results are written to standard error after every 10 sets of training patterns. If you do not need them, comment out the corresponding parts of main.cpp. The final results are written to standard output.
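The control flow described above can be sketched roughly as follows. This is not the actual main.cpp: `runExperiment` and `recallTrial` are hypothetical names, and `recallTrial` is only a placeholder that succeeds with a fixed probability instead of training and running a network.

```cpp
#include <iostream>
#include <random>

// Hypothetical placeholder for one recall trial. A real trial would add noise
// to a training pattern, run the network, and check whether it was recalled.
bool recallTrial(std::mt19937& rng, double successProb) {
    std::bernoulli_distribution success(successProb);
    return success(rng);
}

// Sketch of the experiment loop: numSets sets of training patterns,
// trialsPerSet trials per set, progress on stderr after every 10 sets,
// and the final success rate on stdout.
double runExperiment(int numSets, int trialsPerSet, double successProb, unsigned seed) {
    std::mt19937 rng(seed);
    long successes = 0;
    for (int set = 1; set <= numSets; ++set) {
        // (A real program would generate new training patterns and train here.)
        for (int t = 0; t < trialsPerSet; ++t) {
            if (recallTrial(rng, successProb)) ++successes;
        }
        if (set % 10 == 0) {
            // Intermediate results: comment this out if they are not needed.
            std::cerr << set << " sets done, successes so far: " << successes << '\n';
        }
    }
    double rate = static_cast<double>(successes) /
                  (static_cast<double>(numSets) * trialsPerSet);
    std::cout << rate << '\n';  // final result
    return rate;
}
```

Separating progress (stderr) from results (stdout) lets you redirect only the final results to a file, e.g. `./a.out > result.txt`, while progress still appears on the terminal.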

CHNN code

I provide the code for complex-valued Hopfield neural networks (CHNNs). Note that only randomly generated data and impulsive noise are implemented. The following article is often cited for CHNNs.

S. Jankowski, A. Lozowski, J. M. Zurada : “Complex-valued multistate neural associative memory”, IEEE Transactions on Neural Networks, Vol.7, No.6, pp.1491-1496 (1996)

- All the following files should be downloaded.

main.cpp

C.h

cmatrix.h

cmatrix.cpp

cneuron.h

cneuron.cpp

cweight.h

cweight.cpp

chnn.h

chnn.cpp

pattern.h

pattern.cpp

- The constants of main.cpp should be modified.

\(K\) is the resolution factor.

\(N\) is the number of neurons.

\(P\) is the number of training patterns.

- Compile the code with

g++ chnn.cpp cneuron.cpp cweight.cpp pattern.cpp cmatrix.cpp main.cpp -lm -O2

HHNN code

I provide the code for hyperbolic-valued Hopfield neural networks (HHNNs). Note that only randomly generated data and impulsive noise are implemented. In hweight.cpp, Hweight::projection() and Hweight::nrprojection() implement the projection rule and the noise robust projection rule, respectively. By default, the normal projection rule is adopted. To use the noise robust projection rule, replace weight.projection() with weight.nrprojection() in hhnn.cpp. For details, refer to the following articles.

M. Kobayashi: “Hyperbolic Hopfield Neural Networks with Directional Multistate Activation Function”, Neurocomputing, Vol.275, pp.2217-2226 (2018)

M. Kobayashi: “Noise Robust Projection Rule for Hyperbolic Hopfield Neural Networks”, IEEE Transactions on Neural Networks and Learning Systems, Vol.31, No.1, pp.352-356 (2020)
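An HHNN works with hyperbolic numbers \(z = x + uy\), where the unit \(u\) satisfies \(u^2 = +1\) (in contrast to \(i^2 = -1\) for complex numbers). The compile command below includes hyperbolic.cpp, which presumably implements this arithmetic; the sketch here is only an illustration of the multiplication rule, and the type `Hyperbolic` and function `mul` are hypothetical names, not the interface of that file.

```cpp
// Minimal hyperbolic number z = re + u * hy, with u * u = +1.
struct Hyperbolic {
    double re;  // real part
    double hy;  // coefficient of the unit u
};

// (a + u b)(c + u d) = (ac + bd) + u (ad + bc), using u * u = +1.
Hyperbolic mul(Hyperbolic p, Hyperbolic q) {
    return {p.re * q.re + p.hy * q.hy, p.re * q.hy + p.hy * q.re};
}
```

Multiplication is commutative, but nonzero hyperbolic numbers can multiply to zero, for example \((1+u)(1-u) = 1 - u^2 = 0\), so not every nonzero hyperbolic number has an inverse.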

- All the following files should be downloaded.

main.cpp

hmatrix.h

hmatrix.cpp

hyperbolic.cpp

hneuron.h

hneuron.cpp

hweight.h

hweight.cpp

hhnn.h

hhnn.cpp

pattern.h

pattern.cpp

- The constants of main.cpp should be modified.

\(K\) is the resolution factor.

\(N\) is the number of neurons.

\(P\) is the number of training patterns.

- Compile the code with

g++ hhnn.cpp hneuron.cpp hweight.cpp pattern.cpp hmatrix.cpp hyperbolic.cpp main.cpp -lm -O2

RHNN code

I provide the code for rotor Hopfield neural networks (RHNNs). Note that only randomly generated data and impulsive noise are implemented. Rweight::projection() in rweight.cpp implements the projection rule; the noise robust projection rule is not implemented in this code. Refer to the following article for the noise robust projection rule.

M. Kobayashi: “Noise Robust Projection Rule for Rotor and Matrix-Valued Hopfield Neural Networks”, IEEE Transactions on Neural Networks and Learning Systems, Vol.33, No.2, pp.567-576 (2022)
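In an RHNN, a neuron state can be viewed as a two-dimensional unit vector and a connection weight as a \(2 \times 2\) real matrix, which is consistent with the vec2 and matrix files listed below. Under that assumption, the following sketch computes the weighted sum for one neuron and quantizes it to the nearest of \(K\) directions. `Vec2`, `Mat2`, `weightedSum` and `quantize` are hypothetical names, and the actual rneuron.cpp may use a different quantization convention.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

using Vec2 = std::array<double, 2>;
using Mat2 = std::array<std::array<double, 2>, 2>;  // row-major 2x2 matrix

// Weighted sum h = sum_k W[k] * x[k] for one neuron (matrix times vector).
Vec2 weightedSum(const std::vector<Mat2>& W, const std::vector<Vec2>& x) {
    Vec2 h{0.0, 0.0};
    for (std::size_t k = 0; k < W.size(); ++k) {
        h[0] += W[k][0][0] * x[k][0] + W[k][0][1] * x[k][1];
        h[1] += W[k][1][0] * x[k][0] + W[k][1][1] * x[k][1];
    }
    return h;
}

// Index s of the unit vector (cos(2*pi*s/K), sin(2*pi*s/K)) with the largest
// dot product with h, i.e. the direction nearest to h.
int quantize(const Vec2& h, int K) {
    const double pi = std::acos(-1.0);
    int best = 0;
    double bestDot = std::numeric_limits<double>::lowest();
    for (int s = 0; s < K; ++s) {
        double angle = 2.0 * pi * s / K;
        double dot = h[0] * std::cos(angle) + h[1] * std::sin(angle);
        if (dot > bestDot) {
            bestDot = dot;
            best = s;
        }
    }
    return best;
}
```

A matrix weight can rotate and scale the input vector independently in each direction, which is more general than multiplying by a single complex number.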

- All the following files should be downloaded.

main.cpp

matrix.h

matrix.cpp

rneuron.h

rneuron.cpp

rweight.h

rweight.cpp

rhnn.h

rhnn.cpp

pattern.h

pattern.cpp

vec2.h

vec2.cpp

- The constants of main.cpp should be modified.

\(K\) is the resolution factor.

\(N\) is the number of neurons.

\(P\) is the number of training patterns.

- Compile the code with

g++ rhnn.cpp rneuron.cpp rweight.cpp pattern.cpp vec2.cpp matrix.cpp main.cpp -lm -O2

QHNN code

I provide the code for quaternion-valued Hopfield neural networks (QHNNs) with twin-multistate neurons. Only randomly generated data and impulsive noise are implemented. A quaternion-valued neuron has two multistate components, so for \(N\) neurons the dimension of the training vectors is \(2N\). Multiplication of quaternions is not commutative: for a connection weight \(w\) and a neuron state \(z\), \(wz\) and \(zw\) differ in general. The QHNNs employing the former and the latter multiplications are referred to as left and right QHNNs, respectively. The left QHNN is the standard one. Refer to the following article for these models.

M. Kobayashi: “Quaternionic Hopfield Neural Networks with Twin-Multistate Activation Function”, Neurocomputing, Vol.267, pp.304-310 (2017)
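The difference between \(wz\) and \(zw\) can be checked directly with the Hamilton product. In the sketch below, `Quaternion` and its `operator*` are hypothetical and not taken from quaternion.h; a left QHNN would compute `w * z` and a right QHNN `z * w`.

```cpp
// Quaternion a + b i + c j + d k.
struct Quaternion {
    double a, b, c, d;
};

// Hamilton product, using i*i = j*j = k*k = ijk = -1.
Quaternion operator*(const Quaternion& p, const Quaternion& q) {
    return {
        p.a * q.a - p.b * q.b - p.c * q.c - p.d * q.d,
        p.a * q.b + p.b * q.a + p.c * q.d - p.d * q.c,
        p.a * q.c - p.b * q.d + p.c * q.a + p.d * q.b,
        p.a * q.d + p.b * q.c - p.c * q.b + p.d * q.a,
    };
}
```

For the units themselves, \(ij = k\) but \(ji = -k\), so swapping the order of the operands flips the sign of the result in this case.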

A model employing both left and right weights has also been proposed; it is referred to as a QHNN with dual connections. Refer to the following article for this model.

M. Kobayashi: “Quaternion-Valued Twin-Multistate Hopfield Neural Networks with Dual Connections”, IEEE Transactions on Neural Networks and Learning Systems, Vol.32, No.2, pp.892-899 (2021)

- All the following files should be downloaded.

main.cpp

quaternion.h

quaternion.cpp

qmatrix.h

qmatrix.cpp

qneuron.h

qneuron.cpp

qweight.h

qweight.cpp

qhnn.h

qhnn.cpp

pattern.h

pattern.cpp

- The constants of main.cpp should be modified.

\(K\) is the resolution factor.

\(N\) is the number of neurons. Remark that the dimension of training vectors is \(2N\), since a quaternion-valued neuron has two multistate components.

\(P\) is the number of training patterns.

QMode is selected from QHNN::L, QHNN::R and QHNN::D. QHNN::L and QHNN::R are the left and right QHNNs, respectively, and QHNN::D is the QHNN with dual connections. QMode is changed by modifying “#define QMode (QHNN::L)” in main.cpp.

- Compile the code with

g++ qhnn.cpp qneuron.cpp qweight.cpp pattern.cpp quaternion.cpp qmatrix.cpp main.cpp -lm -O2
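Each quaternion-valued neuron has two multistate components, which gives \(2N\) components for \(N\) neurons. One way to see this, assuming the decomposition \(q = z_1 + z_2 j\) with complex \(z_1 = a + bi\) and \(z_2 = c + di\) (valid because \((c + di)j = cj + dk\)), is the sketch below. `Quaternion` and `toComponents` are hypothetical names, and qneuron.cpp may split quaternions differently.

```cpp
#include <complex>
#include <vector>

// Quaternion a + b i + c j + d k.
struct Quaternion {
    double a, b, c, d;
};

// Assumed split q = z1 + z2 j with z1 = a + b i and z2 = c + d i.
// N quaternion-valued neurons yield 2N complex components; a twin-multistate
// activation would quantize each of them to one of K states.
std::vector<std::complex<double>> toComponents(const std::vector<Quaternion>& q) {
    std::vector<std::complex<double>> out;
    out.reserve(2 * q.size());
    for (const Quaternion& x : q) {
        out.emplace_back(x.a, x.b);  // first multistate component z1
        out.emplace_back(x.c, x.d);  // second multistate component z2
    }
    return out;
}
```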

To modify the code

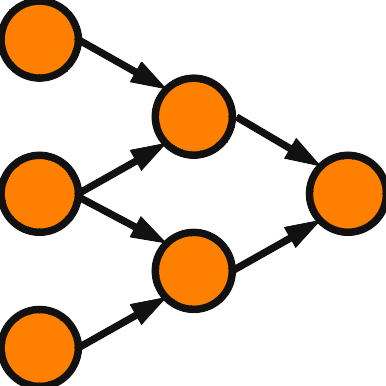

The structure of the code is similar for all the models. The following explains how to modify it, taking the code for complex-valued Hopfield neural networks as an example.

In main.cpp, \( K, N, P, C \) and \(S\) should be set.

- \(K\) is the resolution factor. The neuron state ranges from \(0\) to \(K-1\).

- \(N\) is the number of neurons.

- \(P\) is the number of training patterns. Since the projection rule is employed, \(P\) must be less than \(N\) for CHNNs. However, \(P\) should be much smaller than \(N\) for good noise tolerance; a CHNN works well for \( P < 0.1N \).

- \(C\) and \(S\) determine the rates of impulsive noise: the noise rate takes the values \( 0.0, S, 2S, \cdots, CS \).
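For example, with \(C = 10\) and \(S = 0.05\), the noise rate runs from \(0\) to \(0.5\) in 11 steps. The sketch below generates this list; `noiseRates` is a hypothetical helper, not a function in main.cpp.

```cpp
#include <vector>

// Noise rates 0, S, 2S, ..., CS (C + 1 values). Computing c * S directly,
// rather than adding S repeatedly, avoids accumulating rounding error.
std::vector<double> noiseRates(int C, double S) {
    std::vector<double> rates;
    for (int c = 0; c <= C; ++c) {
        rates.push_back(c * S);
    }
    return rates;
}
```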

Training data can be replaced in chnn.cpp. In the constructor CHNN(), the training patterns are assigned to the variables “pattern” and “data”. “pattern” stores the training patterns as integers ranging from \(0\) to \(K-1\). “data” stores the training patterns as complex numbers and is used by the learning algorithm.
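The relation between “pattern” and “data” can be sketched as follows, assuming the usual multistate encoding in which integer state \(s\) corresponds to the complex number \(e^{2\pi i s/K}\) on the unit circle. `randomPattern` and `toComplex` are hypothetical helpers; to use your own training data, you would fill the integer pattern from your data instead of generating it randomly.

```cpp
#include <cmath>
#include <complex>
#include <random>
#include <vector>

// Random pattern of N integer states, each uniformly drawn from 0..K-1.
std::vector<int> randomPattern(int N, int K, std::mt19937& rng) {
    std::uniform_int_distribution<int> state(0, K - 1);
    std::vector<int> pattern(N);
    for (int& s : pattern) s = state(rng);
    return pattern;
}

// Map each integer state s to the unit complex number exp(2*pi*i*s/K).
std::vector<std::complex<double>> toComplex(const std::vector<int>& pattern, int K) {
    const double twoPi = 2.0 * std::acos(-1.0);
    std::vector<std::complex<double>> data;
    data.reserve(pattern.size());
    for (int s : pattern) {
        data.push_back(std::polar(1.0, twoPi * s / K));
    }
    return data;
}
```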

The learning algorithm can be modified in cweight.cpp. In this code, the projection rule is implemented as the learning algorithm. It is called in the constructor CHNN() of chnn.cpp, so to use a different learning algorithm, the call “weight.projection()” should also be modified.
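For reference, the projection rule for complex-valued training vectors is commonly written as follows, with the \(P\) training vectors \(\mathbf{x}^{1}, \ldots, \mathbf{x}^{P}\) as the columns of \(X\) and \(X^{*}\) the conjugate transpose. It also shows why \(P\) is limited by \(N\): \(X^{*}X\) is a \(P \times P\) matrix that is invertible only if the training vectors are linearly independent, which requires \(P \le N\), and for \(P = N\) the matrix \(W\) becomes the identity, which stores nothing useful. This is the textbook form; details such as the treatment of self-connections in cweight.cpp may differ.

\[ X = \left( \mathbf{x}^{1}, \mathbf{x}^{2}, \ldots, \mathbf{x}^{P} \right) \in \mathbb{C}^{N \times P}, \qquad W = X \left( X^{*} X \right)^{-1} X^{*} \]

\[ W X = X \left( X^{*} X \right)^{-1} \left( X^{*} X \right) = X, \quad \text{so} \quad W \mathbf{x}^{p} = \mathbf{x}^{p} \quad (p = 1, 2, \ldots, P) \]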

The noise model can be modified in cneuron.cpp. In this code, impulsive noise is implemented. The function “addnoise()” defines how to add noise.
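A minimal sketch of impulsive noise, assuming that each neuron state is independently replaced, with probability equal to the noise rate, by a state drawn uniformly from the other \(K-1\) states. Whether addnoise() excludes the original state is an assumption here, and `addImpulsiveNoise` is a hypothetical name.

```cpp
#include <random>
#include <vector>

// Impulsive-noise sketch (assumption: a hit neuron always changes state).
// Each state is hit with probability `rate`; a hit state moves to one of the
// other K - 1 states with equal probability. Requires K >= 2.
void addImpulsiveNoise(std::vector<int>& states, int K, double rate, std::mt19937& rng) {
    std::bernoulli_distribution hit(rate);
    std::uniform_int_distribution<int> offset(1, K - 1);
    for (int& s : states) {
        if (hit(rng)) {
            s = (s + offset(rng)) % K;  // offset in 1..K-1, so the state differs
        }
    }
}
```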

The activation function can be modified in cneuron.cpp. The function “activation()” defines the activation function. It returns the neuron state as an integer between \(0\) and \(K-1\). The functions “getstate()” and “getv()” should also be modified.
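The activation function can be sketched as follows, assuming it rounds the phase of the weighted sum to the nearest of the \(K\) states \(e^{2\pi i s/K}\). Some multistate models instead assign each state a half-open sector of phases, so the actual activation() may differ near sector boundaries; `weightedSum` and `activation` here are illustrative and not copied from cneuron.cpp.

```cpp
#include <cmath>
#include <complex>
#include <cstddef>
#include <vector>

// Weighted sum for one neuron: h = sum_k w[k] * z[k].
std::complex<double> weightedSum(const std::vector<std::complex<double>>& w,
                                 const std::vector<std::complex<double>>& z) {
    std::complex<double> h(0.0, 0.0);
    for (std::size_t k = 0; k < w.size(); ++k) h += w[k] * z[k];
    return h;
}

// Nearest-state multistate activation: returns the integer state 0..K-1
// whose phase 2*pi*s/K is closest to arg(h).
int activation(std::complex<double> h, int K) {
    const double twoPi = 2.0 * std::acos(-1.0);
    double phase = std::arg(h);        // in (-pi, pi]
    if (phase < 0.0) phase += twoPi;   // now in [0, 2*pi)
    long s = std::lround(phase * K / twoPi);
    return static_cast<int>(s % K);    // a phase just below 2*pi wraps to 0
}
```

If the activation is changed, the mapping from integer states back to complex numbers (the role of getv() in the original code) must use the same convention, which is why those functions need updating together.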